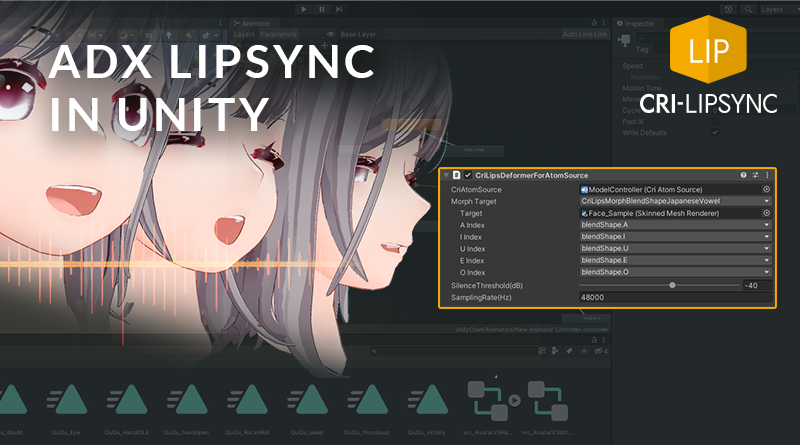

Using ADX LipSync in Unity

In our previous post, we described how CriLipsMake generates mouth patterns offline by analyzing speech data, and how artists can use these patterns to create facial animation in their tools.

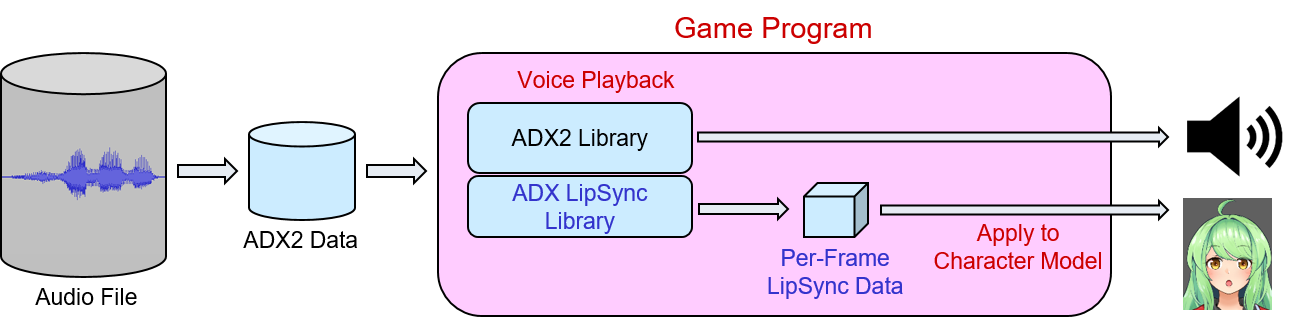

This time, we will see how the ADX LipSync library can obtain the same kind of mouth patterns in Unity by analyzing audio in real time, as it is played.

Unity Plug-in

The easiest way to use ADX LipSync in Unity is via the extension package provided with the CRIWARE Unity Plugin. Its location is:

\expansion\cri_adx_lipsync\unity\plugin\cri_adxlipsync_unity_plugin.unitypackage

Using the plugin is simple: you connect a sound source (a CriAtomSource) to a dedicated CriLipsDeformer component. This component analyzes the sound in real time and drives the character's mouth movements during audio playback.

Character model preparation

The character model has to be prepared in advance so that its mouth shape can be controlled by the ADX LipSync data. You can use blend shapes created in an external tool such as Maya or Blender, as well as mouth shapes set up directly with Unity's Animator.

Once your model is ready, import it into your scene. For instance, the character model used for this post has nine blend shapes, corresponding to the two sets of mouth-shape morph targets generated by ADX LipSync:

- Width/Height: vertical opening, side opening, side closing, and tongue height (4 blend shapes).

- Japanese vowels: A, I, U, E, and O (5 blend shapes).
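One way to picture how these two morph target sets map onto the model's nine blend shapes is as a lookup table. The minimal Python sketch that follows assumes hypothetical parameter names, a hypothetical index layout (Width/Height on indices 0 to 3, vowels on 4 to 8), and morph values normalized to 0.0 to 1.0. It scales those values to 0 to 100, the range Unity's blend shape weights usually use. It only illustrates the idea and is not the plugin's internal logic.

```python
from __future__ import annotations

# Hypothetical names for the two ADX LipSync morph target sets described above;
# the plugin's real identifiers may differ.
WIDTH_HEIGHT_PARAMS = ["vertical_open", "side_open", "side_close", "tongue_height"]
JAPANESE_VOWEL_PARAMS = ["A", "I", "U", "E", "O"]

# Hypothetical blend shape layout on the mesh: 0-3 Width/Height, 4-8 vowels.
BLEND_SHAPE_INDEX = {
    name: index
    for index, name in enumerate(WIDTH_HEIGHT_PARAMS + JAPANESE_VOWEL_PARAMS)
}


def to_blend_shape_weights(morph_values: dict[str, float]) -> dict[int, float]:
    """Convert normalized morph values (0.0-1.0) to {blend shape index: weight 0-100}."""
    weights = {}
    for name, value in morph_values.items():
        clamped = min(max(value, 0.0), 1.0)  # guard against out-of-range input
        weights[BLEND_SHAPE_INDEX[name]] = clamped * 100.0
    return weights
```

For example, `to_blend_shape_weights({"A": 0.5, "O": 0.25})` returns `{4: 50.0, 8: 25.0}`. Keeping the layout in a single table means that re-ordering the blend shapes on the model only requires updating one place.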

This is what the blend shapes look like individually:

Simple implementation

Here is a simple way to control the motion of a character’s mouth with ADX LipSync:

- In the scene, create a new GameObject with a CRI Atom Source component.

- Fill in the Cue Sheet and Cue Name properties of the component, and enable Play On Start.

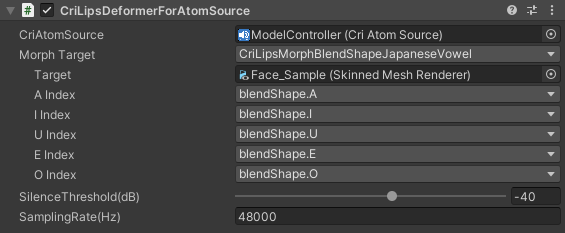

- Then, under the CRI Atom Source, add a CriLipsDeformerForAtomSource component.

The properties of the component are as follows:

- CriAtomSource: Select the CriAtomSource that will provide the audio to be analyzed.

- Morph Target: Choose how the mouth is driven (Unity Animator or standard blend shapes) and which of the two mouth-shape morph target sets to use (Width/Height or Japanese vowels). The four combinations are:

  - CriLipsMorphAnimatorJapaneseVowel

  - CriLipsMorphAnimatorWidthHeight

  - CriLipsMorphBlendShapeJapaneseVowel

  - CriLipsMorphBlendShapeWidthHeight

- Target: Select the character model you want to control.

- Index or Hash: The index or hash used to link each morph target to the corresponding blend shape of the character.

- SilenceThreshold(dB): Threshold value below which the signal is considered silent.

- SamplingRate(Hz): Sampling frequency of the audio data to be analyzed.
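To make the two numeric properties more concrete, here is a minimal Python sketch of a dB silence test and of what the sampling rate means for a block of samples. It assumes the level is measured as RMS in dBFS (full scale = 1.0) over a block of float samples; the plugin may measure its level differently, and the threshold passed in is a placeholder rather than the plugin's default. The function names are hypothetical.

```python
from __future__ import annotations

import math


def rms_dbfs(samples: list[float]) -> float:
    """RMS level of a block of float samples (full scale = 1.0), in dBFS."""
    if not samples:
        return float("-inf")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")  # digital silence
    # 10 * log10(mean square) is the same as 20 * log10(RMS amplitude).
    return 10.0 * math.log10(mean_square)


def is_silent(samples: list[float], silence_threshold_db: float) -> bool:
    """True when the block's level falls below the silence threshold."""
    return rms_dbfs(samples) < silence_threshold_db


def block_duration_ms(num_samples: int, sampling_rate_hz: int) -> float:
    """Time covered by a block of samples at the given sampling rate."""
    return 1000.0 * num_samples / sampling_rate_hz
```

With a placeholder threshold of -40 dB, a block of 480 samples at amplitude 0.001 (about -60 dBFS) counts as silent, while the same block at amplitude 0.5 (about -6 dBFS) does not. At 48000 Hz, those 480 samples cover 10 ms of audio, which is why the analyzer needs to know the actual sampling rate of the data it receives.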

Launch the scene, and you should now see the character’s lips move in sync with the audio being played.

Because the ADX LipSync library analyzes audio in real time, the CPU load can become quite high when multiple audio streams are processed simultaneously. It is therefore recommended to lip-sync at most two characters at a time. However, the CriLipsAtomAnalyzer, which performs the analysis, can be dynamically attached to and detached from a CriAtomExPlayer, which lets you work around this limitation (for example, by attaching it only to the characters who are currently speaking).
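The attach/detach workaround amounts to treating analyzers as a scarce resource with two slots. The Python sketch that follows shows one possible bookkeeping scheme under that assumption. The class and method names are hypothetical, and it makes no CRIWARE calls: the comments only mark where a real project would attach or detach the CriLipsAtomAnalyzer using the plugin's own API, as described in the CRIWARE documentation.

```python
from collections import deque


class LipSyncBudget:
    """Hypothetical helper: lets at most `max_active` characters be analyzed at once."""

    def __init__(self, max_active=2):
        self.max_active = max_active
        self.active = []       # characters that currently have an analyzer attached
        self.waiting = deque()  # speaking characters waiting for a free slot

    def start_speaking(self, character):
        """Call when a character's voice starts playing."""
        if character in self.active or character in self.waiting:
            return  # already tracked
        if len(self.active) < self.max_active:
            self.active.append(character)
            # Real project: attach the analyzer to this character's player here.
        else:
            self.waiting.append(character)

    def stop_speaking(self, character):
        """Call when a character's voice stops; hands its slot to the next in line."""
        if character in self.active:
            self.active.remove(character)
            # Real project: detach the analyzer from this character's player here.
            if self.waiting:
                self.start_speaking(self.waiting.popleft())
        elif character in self.waiting:
            self.waiting.remove(character)
```

In this sketch, a third character who starts talking while two others are being analyzed simply keeps a resting mouth until one of them finishes, and the first-in, first-out queue hands freed slots over in speaking order.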

Now you have all the tools you need to create high-quality facial animation and lip-sync for your game, either offline or in real time!