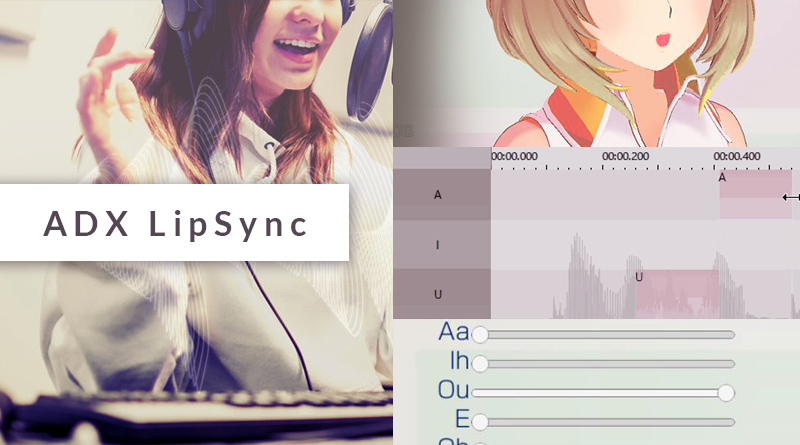

ADX LipSync

At CEDEC 2019, the Japanese game developers conference, we introduced our new lip-sync technology, “CRI ADX® LipSync”, which is due to be released this fall.

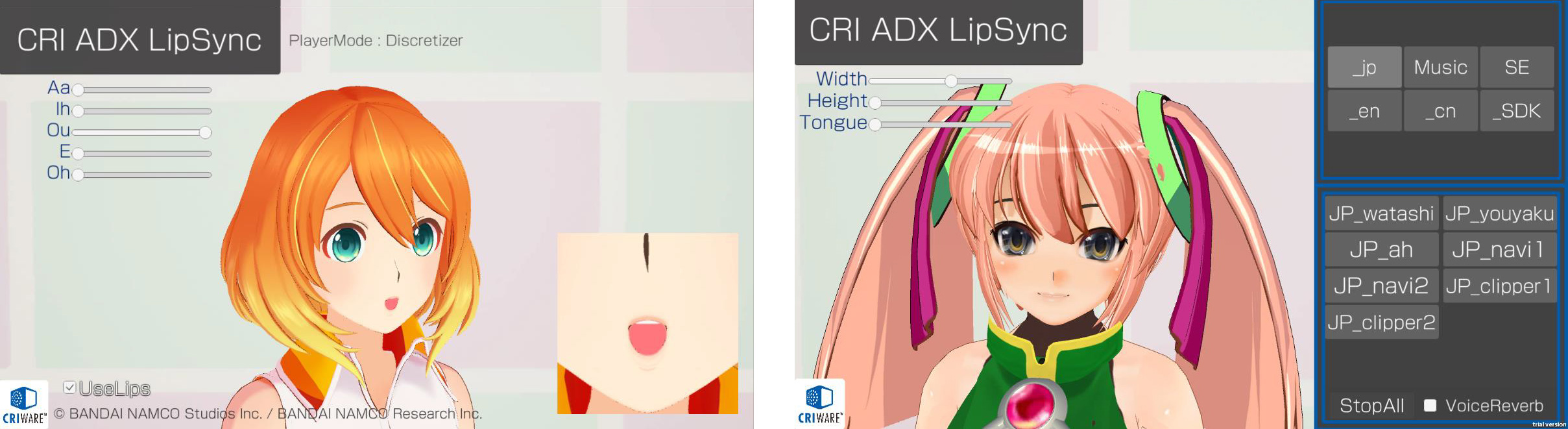

ADX LipSync uses a unique combination of sound analysis and machine learning algorithms to automatically generate mouth patterns that match your characters' dialogue lines. These patterns contain information about the opening of the mouth and the position of the lips and tongue. They are generated accurately regardless of the type of voice, its pitch, or the age of the speaker. Moreover, ADX LipSync is language-independent: it can generate mouth patterns for various languages without requiring dictionaries, which greatly streamlines the development of multilingual games.

ADX LipSync can also work in real time. For example, it can generate mouth patterns from a microphone input, which makes ADX LipSync a great solution for animating avatars in voice chat applications. The mouth patterns are output in a format that can be easily incorporated into various data models. For example, they can drive both 2D and 3D characters, for a richer experience across a wide range of game genres.
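To illustrate how mouth-pattern data of this kind might be consumed by a game, here is a minimal sketch in Python. It is not the ADX LipSync format or API: the `MouthFrame` fields, the `sample` and `to_blend_shapes` functions, and the blend-shape names are all hypothetical, chosen only to mirror the information described above (mouth opening, lip position, tongue position). The sketch assumes the patterns arrive as time-stamped keyframes and interpolates between them linearly.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class MouthFrame:
    """Hypothetical mouth-pattern keyframe (not the actual ADX LipSync format)."""
    time: float       # seconds from the start of the voice line
    opening: float    # 0.0 = closed, 1.0 = fully open
    lip_width: float  # 0.0 = rounded, 1.0 = spread
    tongue: float     # 0.0 = lowered, 1.0 = raised


def sample(frames, t):
    """Return the mouth pattern at time t, linearly interpolated.

    `frames` must be non-empty and sorted by time. Times outside the
    covered range are clamped to the first or last keyframe.
    """
    if not frames:
        raise ValueError("frames must not be empty")
    times = [f.time for f in frames]
    i = bisect_right(times, t)  # index of the first keyframe strictly after t
    if i == 0:
        return frames[0]
    if i == len(frames):
        return frames[-1]
    a, b = frames[i - 1], frames[i]
    w = (t - a.time) / (b.time - a.time)  # blend factor in [0, 1)

    def lerp(x, y):
        return x + (y - x) * w

    return MouthFrame(
        time=t,
        opening=lerp(a.opening, b.opening),
        lip_width=lerp(a.lip_width, b.lip_width),
        tongue=lerp(a.tongue, b.tongue),
    )


def to_blend_shapes(frame):
    """Map a frame to weights for a hypothetical 3D rig's blend shapes."""
    return {
        "jaw_open": frame.opening,
        "lips_wide": frame.lip_width,
        "tongue_up": frame.tongue,
    }
```

In a game loop, `sample` would be called each frame with the current playback time of the voice line, and the result passed to whatever drives the character: blend-shape weights for a 3D model, as here, or a choice among mouth sprites for a 2D one.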

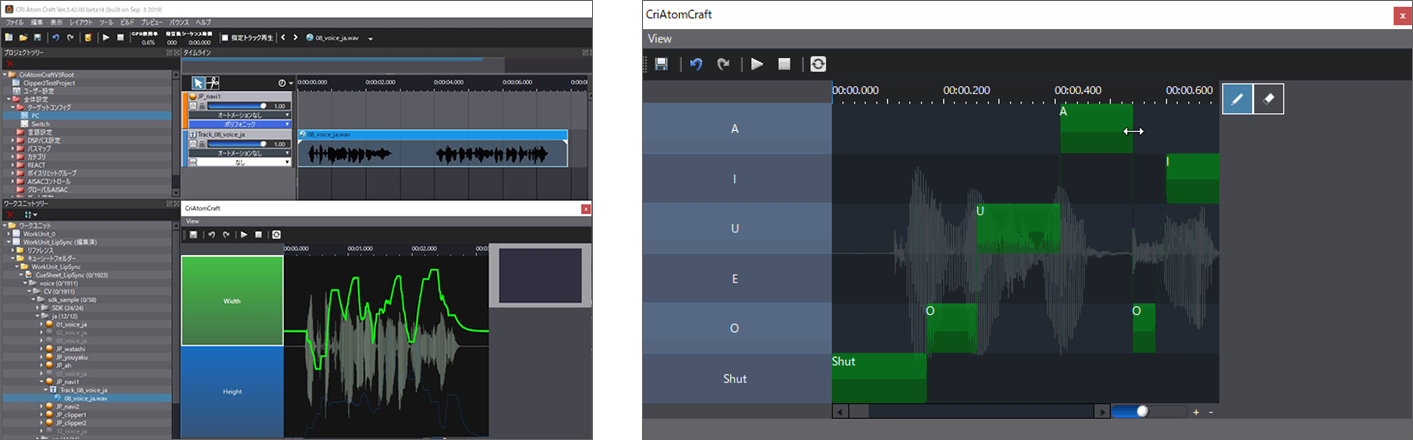

Finally, ADX LipSync integrates perfectly with our game audio middleware solution, ADX2. The mouth patterns can be edited directly in AtomCraft, ADX2’s authoring tool, and played back at run time in sync with the audio.

Do not hesitate to contact us for further information about this groundbreaking new technology!