Interview with Dom Beken

In this special blog post, we had the opportunity to interview Dom Beken: arranger, producer, composer, musician and head of audio at Snap Finger Click. Currently touring with Nick Mason’s Saucerful of Secrets, Dom graciously offered up some of his time to chronicle his audio journey and shed some light on the tricks he employs when creating soundtracks for games.

How did you get into doing audio for games?

Glad you asked, as I think this is a funny story… Since around 2004, I had thought that game audio, and especially music, would be a creative outlet for me and I might even be ok at doing it. I was producing and co-writing with many interesting artists while making my bread and butter with TV adverts. I trawled the internet and my console for game music credits and found almost nothing. I wrote to game studios and publishers with my CV and a showreel and the response, naturally enough, was tumbleweed…

At the same time, I was recording and playing with The Orb, and I also had a project called High Frequency Bandwidth (HFB) as a “side project” with Alex Paterson; both acts toured on and off, but fairly often in Japan. One guy turned up regularly at our Japan shows and, being great company, often got himself backstage and we became friends. Sometime later he messaged me to say that he liked our recent HFB album and that, although he had never mentioned it, he ran a game studio that hoped to use the album in a forthcoming game. That turned out to be Dylan Cuthbert (Q Games/PixelJunk), so I agreed on the condition that I could deliver the album designed as dynamic music, which could interact with the player. That way, I could get a shot at doing some actual game music!

The rest is history…

Your bio mentions being a ProTools programmer, and you are attributed as a programmer with Nick Mason’s Saucerful of Secrets. What does this entail?

Really, I play keyboards and sing backing vocals for NMSoS (Nick Mason’s Saucerful of Secrets). Rick Wright used a heck of a lot of different instruments with Pink Floyd and I wanted to get as many different genuine sounds as possible – always played live. It makes my keyboard rig quite complicated and it took a lot of time to put together.

There’s also loads of audio and MIDI over IP mixed up with analogue sources and effects plus a tonne of controllers… and nearly all of it has to be duplicated for redundancy too. I think someone in the office took pity and added “programmer” to one of my credits somewhere…

Did this experience help you in composing for video games?

Not really – Saucerful is an incredibly live show. There are no backing tracks, loops, or click tracks affecting the performance. Nick Mason is our drummer – who’d want him tied to a click?! However, playing live with HFB and The Orb was hugely influential. Trying to present a studio project live when it has just one or two musicians multi-tracked is practically impossible, so we decided to use technology to re-mix the music on the fly – very much like dynamic game music.

What would you say some of the key differences are between composing for linear media vs composing for video games?

Composing for video games can take away one of the composer’s best weapons – telling a story over a specific timeframe. Sometimes you just don’t know when a particular scene will end or change, so you’re robbed of an easy way to build drama. That makes you really analyse how drama in music works! I’m usually in charge of all audio on the recent games I’ve done, so I don’t get a nice, neat brief of what’s needed from someone else – I have to decide from scratch how the music will work. How I approach that depends hugely on what the style or genre of music will be. Generally though with game music, I’m thinking all the time about texture and mood, and how stems from the same piece of music can be layered in different combinations to achieve varying harmonic and rhythmic textures – without breaking the musical flow.
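To illustrate the idea of layering stems in different combinations, here is a minimal Python sketch. It is not Dom's actual setup: the stem names, the `intensity` value and the rule that maps it to a stack of stems are all assumptions made for the example.

```python
import math
from itertools import combinations

# Hypothetical stem names; a real project would use its own exported stems.
STEMS = ["pads", "bass", "drums", "lead"]

def stem_combinations(stems):
    """Return every non-empty combination of stems, smallest first."""
    combos = []
    for size in range(1, len(stems) + 1):
        combos.extend(combinations(stems, size))
    return combos

def active_stems(intensity, stems=STEMS):
    """Map a 0.0-1.0 gameplay intensity onto a growing stack of stems.

    Assumed rule: at least one stem always plays, and stems are added
    in list order as intensity rises.
    """
    clamped = max(0.0, min(1.0, intensity))
    count = max(1, math.ceil(clamped * len(stems)))
    return stems[:count]
```

With four stems there are 15 possible non-empty combinations, which hints at how much variety a small set of audio files can provide. Calling `active_stems(0.5)` would select the first two stems in the list.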

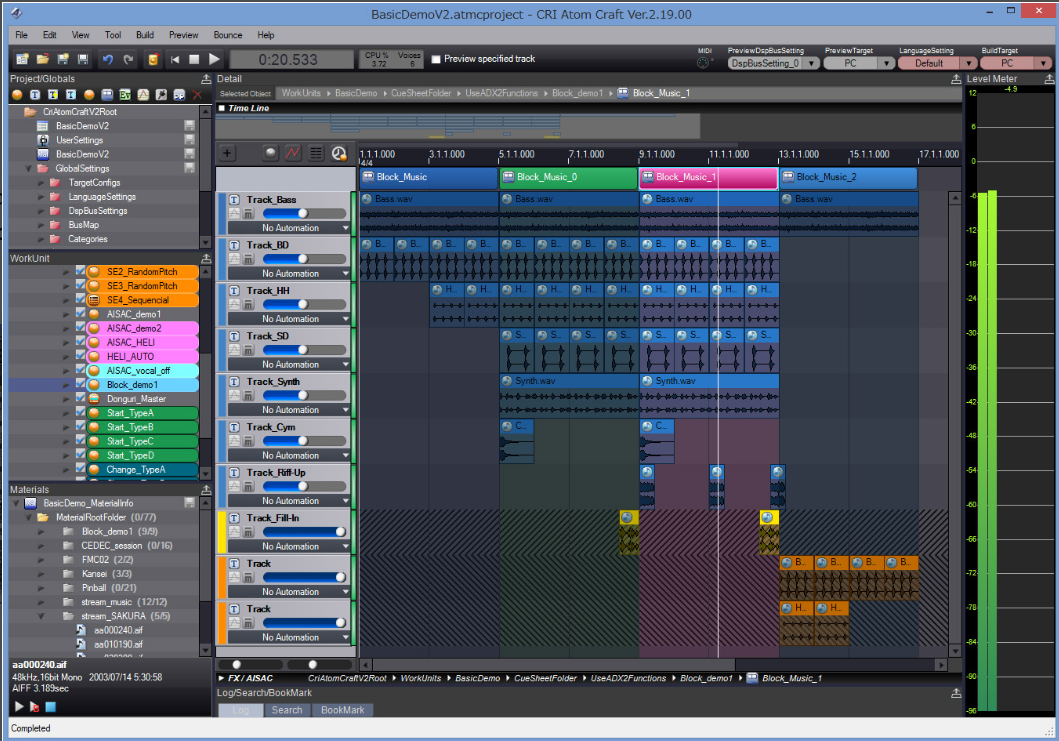

How does working in Atom Craft compare to working in a traditional DAW?

Using Atom Craft is, of course, more complex than a traditional DAW, purely because of the potential for gameplay interactivity that it offers. I definitely always start in a conventional DAW (Logic Pro and Ableton Live for MIDI programming, and ProTools for mixing) to create the base elements and use Atom Craft as a tool for controlling gameplay behaviour. I like to have loops, transitions, stems and all those elements set and tested in ProTools, using gameplay footage first, before exporting to Atom Craft. Once there, I find it more analogous to preparing Ableton for a live DJ set – setting up combinations of sounds and transitions which hang off game events instead of triggering cells.

What do you like about CRI Middleware in particular?

We use Sofdec and ADX2. They’re a powerful combination for audio and video, and although it does a very different job, I find that Atom Craft has been given a UI which is as familiar as possible to a traditional sound engineer, both within Cues and the mixer.

Composing for games often requires an adaptive approach, such as vertical layering or horizontal resequencing. What are some of your favourite approaches to dynamic/adaptive music, and why?

There are a couple of musical tricks I really like. One is to change the harmonic content by having musical parts which change the chords of another part when combined. At its simplest, this might be something like a bass part which moves away from root notes and changes the perceived chord being played in the other part. This triggers a switch that keeps the music feeling familiar but creates a strange feeling in the player, who is unaware of how or why it happened.
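The bass trick can be shown with a small Python sketch. The notes and the chord table are illustrative assumptions, and the code takes the simplifying view that the bass note is always heard as the root of the chord.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Interval sets (semitones above the bass) for a few common chord qualities.
# This table is deliberately tiny; it is only meant to illustrate the idea.
CHORD_QUALITIES = {
    frozenset({0, 4, 7}): "major",
    frozenset({0, 3, 7}): "minor",
    frozenset({0, 3, 7, 10}): "minor 7th",
    frozenset({0, 4, 7, 11}): "major 7th",
}

def perceived_chord(bass, upper_notes):
    """Name the chord heard when `bass` sounds under `upper_notes`.

    Assumption: the bass note is treated as the root.
    """
    bass_pc = NOTE_NAMES.index(bass)
    intervals = frozenset(
        (NOTE_NAMES.index(note) - bass_pc) % 12 for note in [bass, *upper_notes]
    )
    quality = CHORD_QUALITIES.get(intervals, "unnamed")
    return f"{bass} {quality}"

# The upper part never changes; only the bass stem does.
upper_part = ["C", "E", "G"]
print(perceived_chord("C", upper_part))  # C major
print(perceived_chord("A", upper_part))  # A minor 7th
```

The same C, E and G stem reads as C major over a C in the bass, but as A minor 7th once the bass moves to A, so swapping one stem changes the harmony without touching the other part.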

Rhythm is another fun way to mess with people’s heads. Having one part play triplets, or even dotted quavers, over another can give you two parts which separately sound as if they are at very different tempos (if you are careful about where the accents fall). Both tricks can give you a lot of different musical combinations from a relatively small audio payload.
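The arithmetic behind the rhythm trick can be sketched in Python. The 120 BPM base tempo is an assumed figure, and the function names are made up for this example.

```python
from fractions import Fraction
from math import gcd

def implied_tempo(base_bpm, pulse_length_beats):
    """Pulses per minute heard when a part repeats every `pulse_length_beats`."""
    return base_bpm / pulse_length_beats

def beats_until_realigned(length_a, length_b):
    """Number of beats before two repeating pulse lengths coincide again."""
    a, b = Fraction(length_a), Fraction(length_b)
    # Put both lengths over a common denominator, then take the LCM.
    scaled_a = a.numerator * b.denominator
    scaled_b = b.numerator * a.denominator
    lcm = scaled_a * scaled_b // gcd(scaled_a, scaled_b)
    return Fraction(lcm, a.denominator * b.denominator)

BASE_BPM = 120                      # assumed tempo of the piece
DOTTED_QUAVER = Fraction(3, 4)      # three quarters of a beat
CROTCHET_TRIPLET = Fraction(2, 3)   # three notes in the time of two beats

print(implied_tempo(BASE_BPM, DOTTED_QUAVER))     # 160
print(implied_tempo(BASE_BPM, CROTCHET_TRIPLET))  # 180
print(beats_until_realigned(DOTTED_QUAVER, CROTCHET_TRIPLET))  # 6
```

At 120 BPM, a part accenting dotted quavers pulses 160 times a minute and a part accenting crotchet triplets pulses 180 times a minute, so each can suggest its own tempo when heard alone. The two patterns land together again every six beats.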

How do you handle different tempos and keys when composing an interactive/dynamic piece of music? And more specifically, how do you decide on these aspects for a game like Quiz Time, compared to a more action-orientated game like PixelJunk Shooter?

PixelJunk Shooter was a bit different because Q Games definitely wanted the music from our album, albeit in a very re-mixed and re-mixable format. However, I do spend a lot of time puzzling out the relationships between different keys and tempos – it’s sort of advanced DJing for nerds! There have been some remarkably clever things done with in-game synthesis by better game audio designers than me, but my thing has always been that I use a lot of live/real instruments and approach all these things using “finished/mixed” wavs of stems and loops etc. I hope one day there will be a Middleware with the power of something like Ableton, that can mangle, granularise, and shift pitch – all in-game and in real-time (if we can persuade the engineering teams to let audio hog all that RAM and CPU time!).
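One key and tempo relationship familiar from DJing can be written down in a few lines of Python. This is an illustrative sketch, not a description of Dom's workflow: it assumes a loop is sped up or slowed down by plain resampling (varispeed), with no time-stretching, so that pitch and tempo move together.

```python
import math

def semitone_shift(original_bpm, new_bpm):
    """Pitch change in semitones when a loop is resampled to a new tempo."""
    return 12 * math.log2(new_bpm / original_bpm)

def tempo_for_shift(original_bpm, semitones):
    """Tempo at which a resampled loop lands `semitones` away from its key."""
    return original_bpm * 2 ** (semitones / 12)

# Hypothetical example: a 120 BPM loop raised by one semitone.
print(round(tempo_for_shift(120, 1), 2))  # 127.14
```

Under that assumption, doubling the tempo raises a loop by an octave, and moving it up by one semitone means playing it roughly 6% faster.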