CRI LipSync Alive Released

We are pleased to announce that CRI LipSync Alive is now available worldwide. Recently showcased at Unreal Fest 2025 in Tokyo, it is an advanced version of CRI LipSync, our existing lip-sync middleware, which analyzes speech to generate mouth movements for characters.

It relies on machine learning to generate natural lip-sync animations for any spoken language, meeting the growing demand for digital characters that speak in games and enterprise applications.

Machine Learning-Driven Speech Analysis for Any Language

CRI LipSync Alive uses a machine learning model based on the Transformer architecture to achieve highly accurate speech analysis.

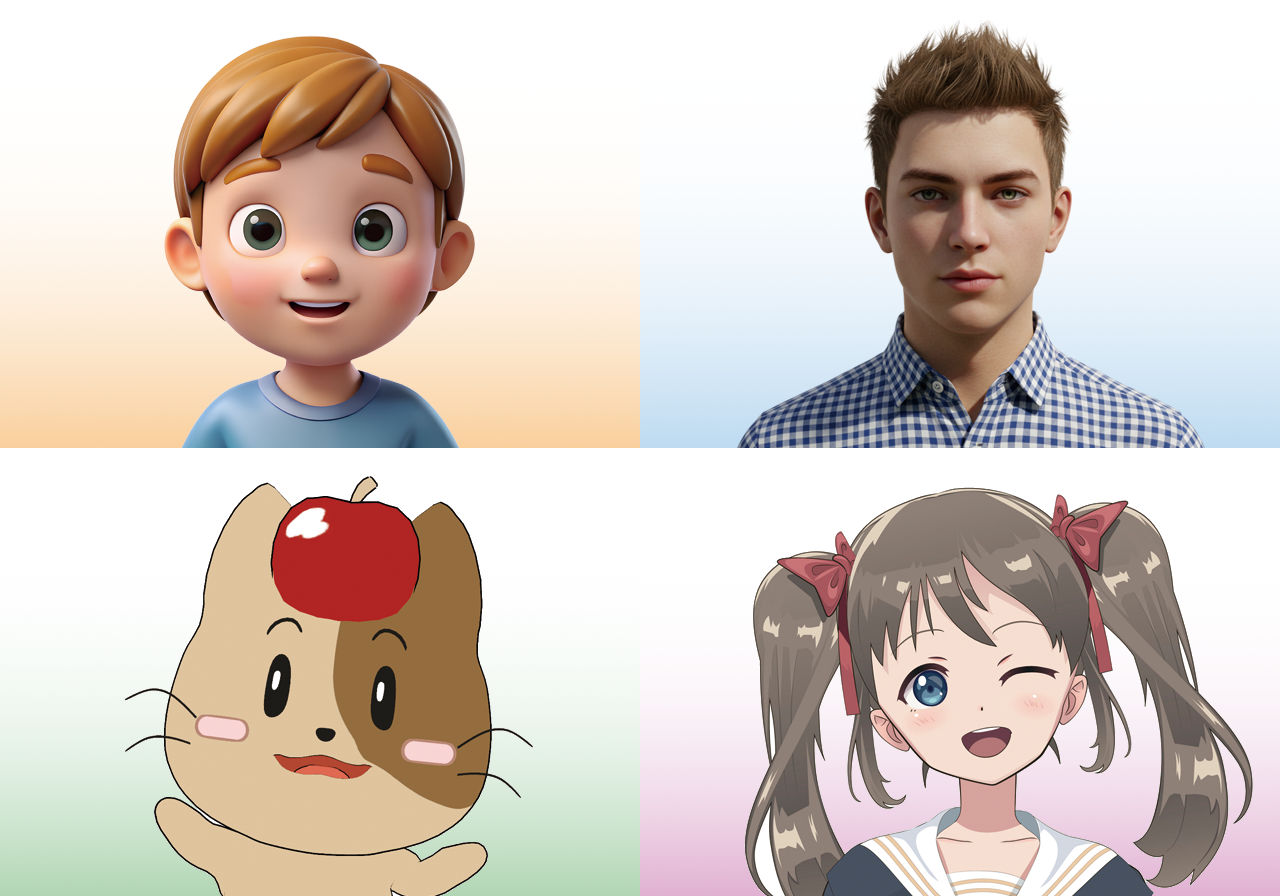

Instead of the five simple mouth shapes used previously (“a-i-u-e-o”), it now outputs up to 14 animation curves representing five vowels and nine consonants, capturing the mouth movements needed to simulate a broad range of languages.
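As a rough illustration of what working with this kind of output might look like, the sketch below models the 14 curves as keyframed weights and samples them at a given time. It is not the CRI LipSync Alive API: the `Curve` class, the `sample_frame` function, the `consonant_1` to `consonant_9` channel names, and the 0-to-1 weight range are all assumptions made for the example, since this announcement gives only the counts of vowel and consonant curves.

```python
from dataclasses import dataclass

# Hypothetical channel names: the announcement states only the counts
# (five vowels, nine consonants), not the actual consonant set.
VOWELS = ("a", "i", "u", "e", "o")
CONSONANTS = tuple(f"consonant_{n}" for n in range(1, 10))
CHANNELS = VOWELS + CONSONANTS  # 14 curves in total


@dataclass
class Curve:
    """One animation curve as (time_sec, weight) keys sorted by time."""
    keys: list[tuple[float, float]]

    def sample(self, t: float) -> float:
        """Linearly interpolate the weight at time t, clamped to [0, 1]."""
        if not self.keys:
            return 0.0
        if t <= self.keys[0][0]:
            value = self.keys[0][1]
        elif t >= self.keys[-1][0]:
            value = self.keys[-1][1]
        else:
            value = 0.0
            for (t0, v0), (t1, v1) in zip(self.keys, self.keys[1:]):
                if t0 <= t <= t1:
                    span = t1 - t0
                    value = v0 if span == 0 else v0 + (v1 - v0) * (t - t0) / span
                    break
        # Assumed weight range for this sketch: 0 (closed) to 1 (fully formed).
        return min(1.0, max(0.0, value))


def sample_frame(curves: dict[str, Curve], t: float) -> dict[str, float]:
    """Return one weight per channel at time t; absent channels are 0."""
    return {
        name: curves[name].sample(t) if name in curves else 0.0
        for name in CHANNELS
    }
```

Because the output is described as "up to" 14 curves, the sketch treats any channel that is not supplied as zero, so a character rig with fewer mouth shapes could read only the channels it supports.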

The system also adjusts for emotion-driven mouth openness, enabling both realistic 3D characters and stylized 2D characters to speak expressively.

Developers can also edit and fine-tune the generated results to meet their production needs.

For Games and Beyond

As digital avatars and AI assistants become more common, accurate lip-sync across languages is increasingly important in both entertainment and enterprise settings.

We have been providing lip-sync technology for about 25 years, not only to the game industry but also for a range of other uses, including VTuber applications and live audio-to-animation systems.

Like the current CRI LipSync tool, CRI LipSync Alive is expected to support real-time analysis in the future. Research is also ongoing to expand its natural expression capabilities, which will benefit all of these applications.

Please contact us if you would like more information about CRI LipSync Alive!