ADX Essential Terms [Part 2]

This is the second part of our lexicon that explains the key terms and fundamental concepts used by ADX. If you are new to our game audio middleware – or just need a quick refresher – check out this 3-part article! In the first part, we introduced the terminology around project structure, audio file management, and the different types of Cues available.

Advanced Cue structures

From the programmer’s point of view, the Cues are the sound objects triggered from the game code. Internally, though, their structure and behaviour can be as complex as the sound designer needs.

Cue Link

A Cue can reference other Cues from the same Cue Sheet. This is done by dropping the referenced Cue onto a Track, which creates a Cue Link. Cue Links make it possible to create audio components that are shared by different Cues, or to break up complex Cues into smaller ones that are easier to read and maintain.

External Cue Links must be created if you want to reference Cues that are in other Cue Sheets. In this case, the reference can be either the name or the ID of the Cue from the other Cue Sheet.

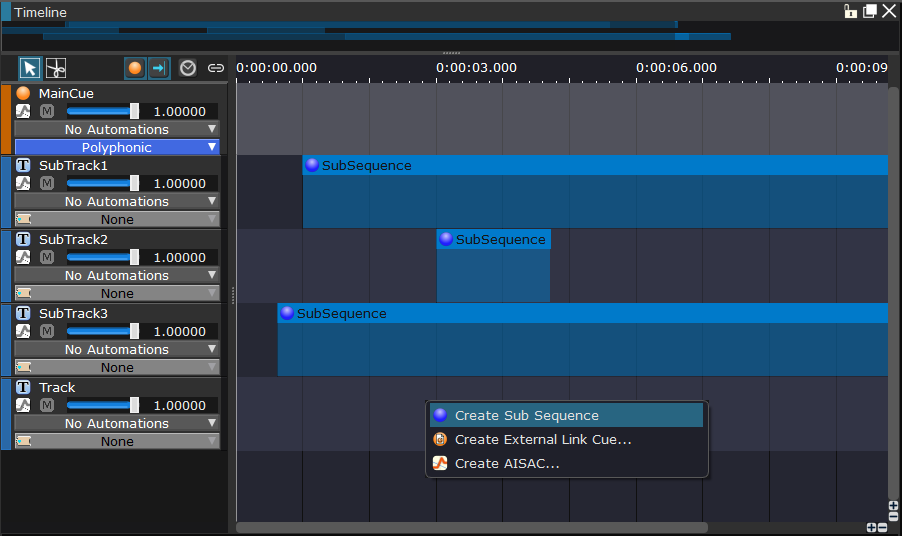

SubSequence

This is another way to create complex Cues while keeping them readable. It is possible to add a SubSequence to a Track. SubSequences can contain several Tracks and can have the same types as Cues. In addition, their Tracks can have their own SubSequences, allowing for several levels of nesting. Unlike with Cue Links, which are simply references to external Cue data, the SubSequence tracks are actually part of the Cue.

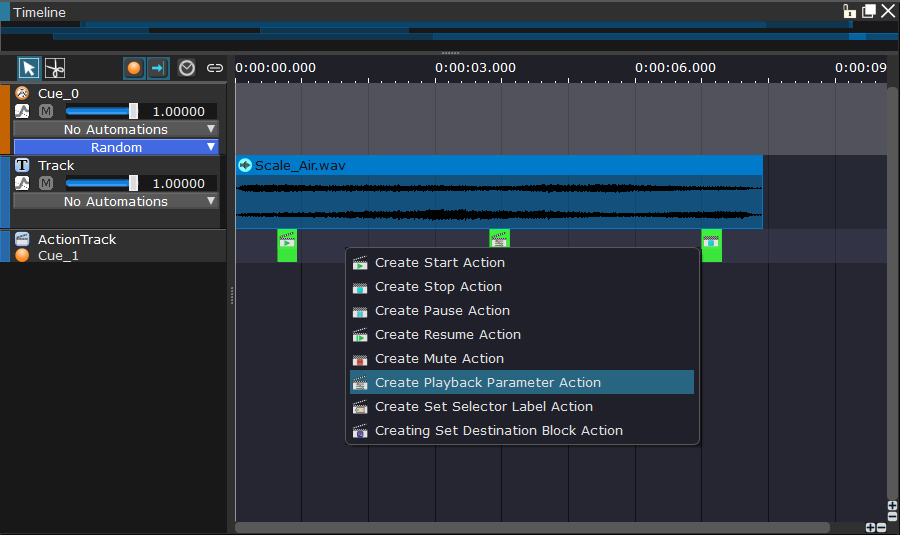

Action Track

These are special tracks with markers on them that will trigger specific Actions when the playback reaches them. Various actions are available, allowing you to start, stop, pause, or update the parameters of other Cues, for example. This makes it possible for a sound designer to create complex sonic behaviors without the help of an audio programmer.
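To make the idea concrete, here is a minimal sketch of how markers on a timeline could fire actions as playback advances. It is a conceptual model only, not the ADX runtime: the `Marker` class, the `fire_markers` function, and the Cue name "rain" are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Marker:
    time_ms: float               # position of the marker on the track
    action: Callable[[], None]   # what to do when playback reaches it


def fire_markers(markers: List[Marker], prev_ms: float, now_ms: float) -> int:
    """Run every action whose marker lies in [prev_ms, now_ms); return the count."""
    fired = 0
    for marker in sorted(markers, key=lambda m: m.time_ms):
        if prev_ms <= marker.time_ms < now_ms:
            marker.action()
            fired += 1
    return fired


# Hypothetical Action Track: start another Cue at 0.5 s, stop it at 2 s.
log: List[str] = []
markers = [
    Marker(500.0, lambda: log.append("start Cue 'rain'")),
    Marker(2000.0, lambda: log.append("stop Cue 'rain'")),
]

fire_markers(markers, 0.0, 1000.0)     # first update: passes the 0.5 s marker
fire_markers(markers, 1000.0, 2500.0)  # second update: passes the 2 s marker
```

Using a half-open time window means each marker fires exactly once, even when the playback position is sampled at irregular intervals.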

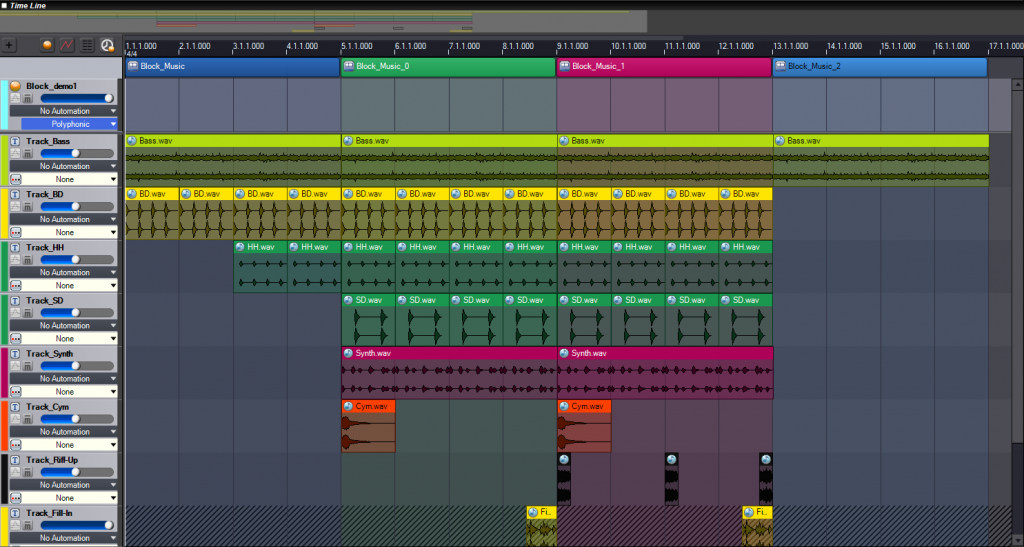

Block Playback

Blocks are used to divide the timeline of a Cue into different sections (therefore, a block references the data of several tracks). Transition rules between the blocks (synchronization, transition division number, etc.) can be designed using the tool. Block Playback is especially useful for interactive music implementation.

Real-time updates

The last two features above, Action Tracks and Block Playback, change the Cue playback behavior in real time. Here are more ways to alter the sound of the Cue while it is playing.

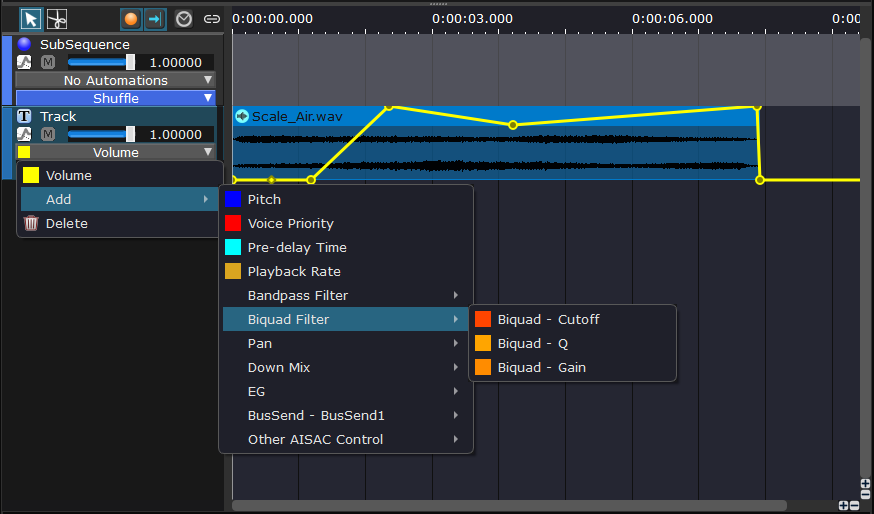

Track Automation

Track automation allows you to create curves that will make track parameters (such as volume, pitch, filter cutoff etc.) evolve over time. These curves are drawn and edited on the tracks themselves.

AISAC

This system allows for the modification of sound parameters in real-time, usually from values coming from the game. AISAC stands for Advanced Interactive Sound and Active Controller. This is equivalent to RTPC (Real-Time Parameter Control) in other tools.

An AISAC can be useful in various scenarios, such as progressively changing the sound of a vehicle engine or adjusting the volume of a crowd’s cheer. It can also be used to blend multiple sounds or modify a sound based on the listener’s position relative to the emitter.

AISAC Control

In the game engine, a game parameter is linked to an AISAC Control. The AISAC Control is simply a named identifier that allows the programmer to pass a value from the game to ADX. This AISAC Control is then assigned to one or more AISACs on a Cue (or any other compatible object, such as a Track, DSP Bus, or Category).

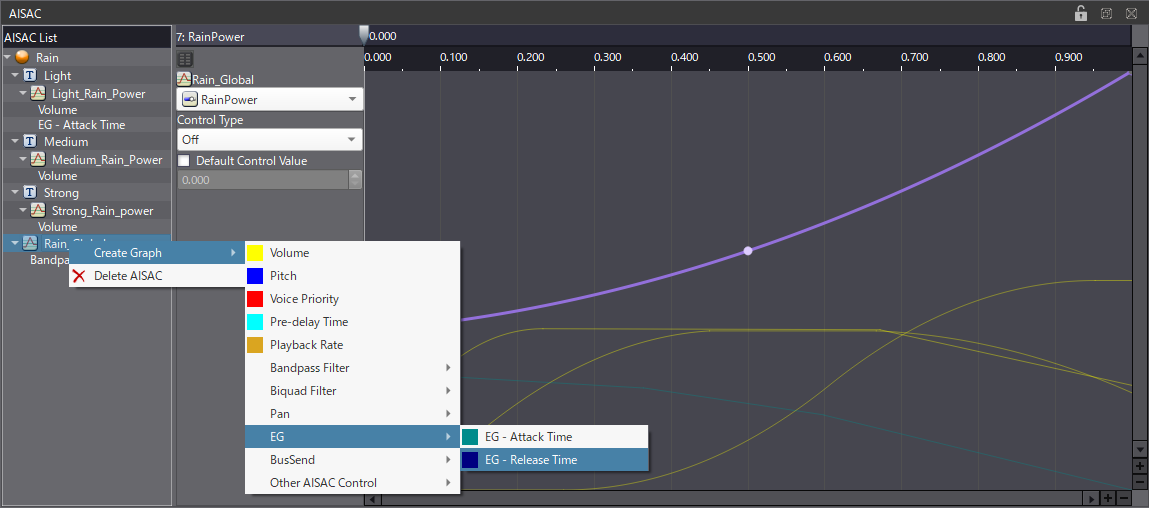

AISAC Graph

The AISAC graph determines how a value from the game will map to various sound parameters of the tracks.
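As an illustration, a graph of this kind can be modeled as a list of points with linear interpolation between them. The sketch below is a conceptual model, not ADX code: the `evaluate_graph` function, the point values, and the assumption that the control value is normalized between 0.0 and 1.0 are ours, and the curve shapes available in the tool may differ.

```python
from bisect import bisect_right


def evaluate_graph(points, control):
    """Map a control value to a parameter value by linear interpolation.

    points: list of (control_value, parameter_value), sorted by control_value.
    Values outside the graph are clamped to the first or last point.
    """
    xs = [x for x, _ in points]
    if control <= xs[0]:
        return points[0][1]
    if control >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, control)          # index of the first point to the right
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    t = (control - x0) / (x1 - x0)         # 0.0 at the left point, 1.0 at the right
    return y0 + t * (y1 - y0)


# Hypothetical graph: normalized engine RPM (control) to track volume.
rpm_to_volume = [(0.0, 0.2), (0.5, 0.8), (1.0, 1.0)]

volume_at_quarter = evaluate_graph(rpm_to_volume, 0.25)  # halfway between 0.2 and 0.8
volume_at_max = evaluate_graph(rpm_to_volume, 1.0)
```

The same game value can drive several graphs at once (volume, pitch, filter cutoff), each with its own set of points.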

Global and Category AISACs

In the first part of this lexicon, we briefly mentioned the Global AISACs, which are accessible to any Cues in the project. They are usually linked to something that will affect the game as a whole. However, there are also Category AISACs that will allow for the modification of the sound parameters of all the Cues belonging to a specific Category.

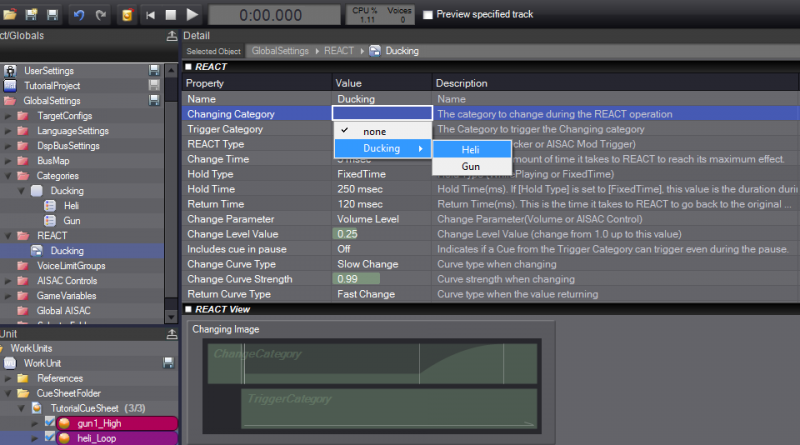

REACT

REACT is an automatic ducking system for Cues belonging to a specific category. For example, you can configure it so that the volumes of all the Cues from the SFX and Music categories are automatically lowered when a Cue from the Dialogue category is triggered, thus ensuring that the dialogue is always intelligible and no information is missed by the player.
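The dialogue example can be sketched as follows. This is a deliberately simplified model that we wrote for illustration: the `ducked_volumes` function and the 0.3 ducking gain are assumptions, and the volume changes instantly instead of fading over time.

```python
def ducked_volumes(base_volumes, active_categories,
                   trigger="Dialogue", targets=("SFX", "Music"), duck_gain=0.3):
    """Return per-category volumes, lowering the targets while the trigger plays.

    base_volumes: dict of category name -> volume set by the sound designer.
    active_categories: set of categories that currently have a Cue playing.
    """
    ducking = trigger in active_categories
    return {
        category: volume * duck_gain if ducking and category in targets else volume
        for category, volume in base_volumes.items()
    }


base = {"SFX": 1.0, "Music": 0.8, "Dialogue": 1.0}

quiet_scene = ducked_volumes(base, {"Music"})             # no dialogue: unchanged
talking = ducked_volumes(base, {"Music", "Dialogue"})     # dialogue: SFX and Music lowered
```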

Selectors and Selector Labels

Selectors are global objects that can take several distinct values (Selector Labels). They are used with Switch Cues to determine which Track should be played when the Cue is triggered.

For example, we could define a “Surface” Selector with “dirt”, “grass”, “concrete”, “wood”, “gravel”, etc., as its Selector Labels. When the player’s character walks on a different surface in the game, the code could set the corresponding Selector Label on the Cue, so that the correct footstep sound is triggered when the Cue is played.
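Conceptually, the footsteps example boils down to a lookup from the current Selector Label to a Track. The sketch below models that lookup in plain Python; the `pick_track` function and the track names are hypothetical and do not correspond to the ADX runtime API.

```python
def pick_track(tracks_by_label, selector_values, selector_name):
    """Return the Track of a Switch Cue that matches the current Selector Label."""
    label = selector_values[selector_name]   # label currently set by the game code
    return tracks_by_label[label]            # KeyError if no Track uses that label


# Hypothetical Switch Cue driven by the "Surface" Selector.
footstep_tracks = {
    "dirt": "footstep_dirt",
    "grass": "footstep_grass",
    "concrete": "footstep_concrete",
    "wood": "footstep_wood",
    "gravel": "footstep_gravel",
}

selector_values = {"Surface": "grass"}
first_step = pick_track(footstep_tracks, selector_values, "Surface")

selector_values["Surface"] = "wood"          # the character steps onto a wooden floor
second_step = pick_track(footstep_tracks, selector_values, "Surface")
```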

Track Transition by Selector

This is one type of Cue we haven’t mentioned yet. With this type, the Track can be switched during playback when the value of a Selector is changed. A crossfade can be set when transitioning between Tracks. It is also possible to transition Tracks in sync with a beat, making it suitable for interactive music.

Cue and Track Parameters

Finally, let’s check a few of the key terms related to the Cue and Track parameters.

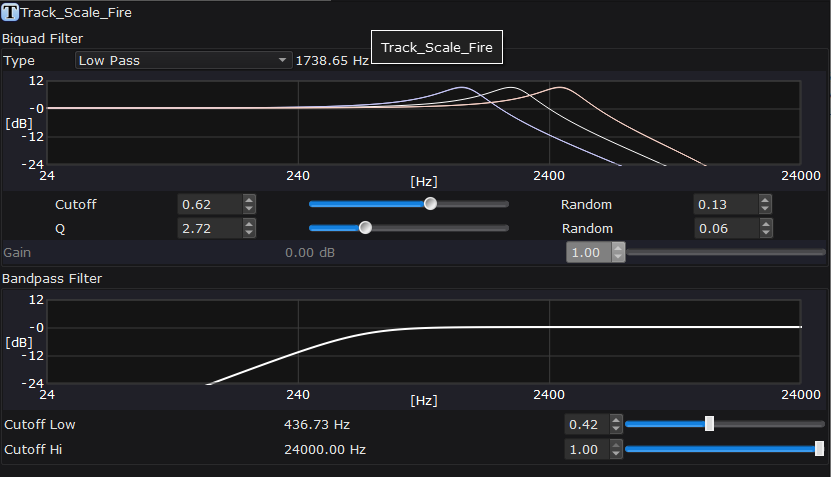

Biquad and Bandpass Filters

The output of each Track of a Cue can be processed by 2 filters, in order to cut or emphasize specific frequency ranges.

The first filter is a general Biquad that can be set to lowpass, highpass, notch, low shelf, high shelf, or peaking modes, or simply disabled. Some randomization can be applied, so that the filter’s frequency response curve is not always the same.

An additional bandpass filter is available if needed, allowing you to specify cutoff frequencies for low and high frequencies.
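For readers curious about what a biquad does under the hood, here is a sketch of the lowpass mode using the widely used "Audio EQ Cookbook" formulas by Robert Bristow-Johnson. We are not claiming that ADX computes its coefficients this way; the function names and the default Q value are assumptions chosen for illustration.

```python
import math


def lowpass_coefficients(cutoff_hz, sample_rate_hz, q=math.sqrt(0.5)):
    """Normalized biquad lowpass coefficients (Audio EQ Cookbook formulas)."""
    w0 = 2.0 * math.pi * cutoff_hz / sample_rate_hz
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha
    b = ((1.0 - cos_w0) / 2.0 / a0, (1.0 - cos_w0) / a0, (1.0 - cos_w0) / 2.0 / a0)
    a = (-2.0 * cos_w0 / a0, (1.0 - alpha) / a0)
    return b, a


def process(samples, b, a):
    """Filter a list of samples with the direct form I difference equation."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[0] * y1 - a[1] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


b, a = lowpass_coefficients(cutoff_hz=1000.0, sample_rate_hz=48000.0)

constant = process([1.0] * 2000, b, a)                          # 0 Hz passes through
nyquist = process([(-1.0) ** n for n in range(2000)], b, a)     # 24 kHz is removed
```

The other modes (highpass, notch, shelves, peaking) use the same difference equation with different coefficients.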

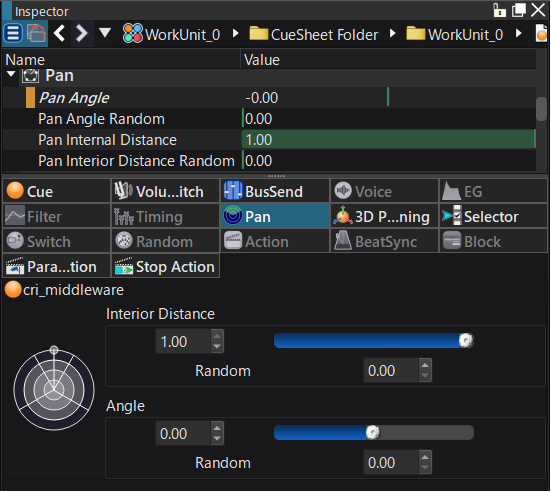

Pan 3D

Pan 3D is a function that calculates the sound positioning in a multi-speaker environment (stereo, 5.1ch, 7.1ch, 7.1.4ch, etc.) based on Cue parameters such as angle and interior distance.

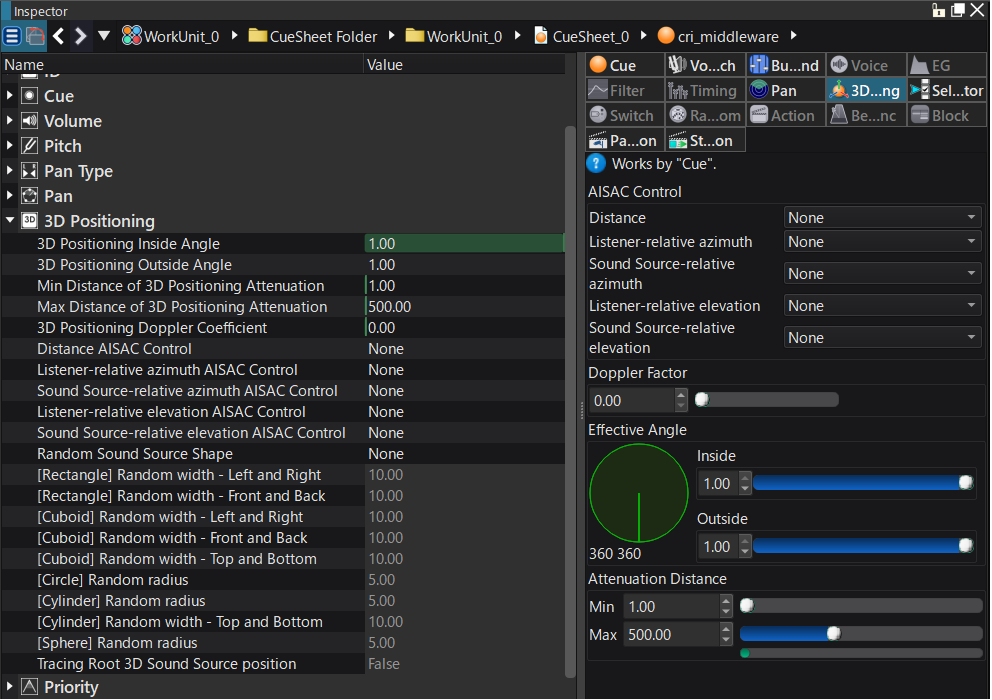

3D Positioning

3D audio positioning requires the programmer to set and update the positions of sound emitters and listeners in the 3D space of the game. By creating AISAC controls that correspond to the distance and angle from the sound emitters, it is possible to create richer 3D spatial tonal changes that match each Cue’s unique distance attenuation and angle changes.

It is also possible to specify a 2D or 3D shape within which the sound emitter will be randomly positioned each time the Cue is triggered. This is useful, for example, to simulate environmental sounds coming from a river, a forest, etc.
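The random positioning can be pictured with a short sketch. We assume an axis-aligned box here, which is only one possible shape; the `random_point_in_box` function and the river dimensions are hypothetical.

```python
import random


def random_point_in_box(center, half_extents, rng):
    """Pick a uniformly distributed point inside an axis-aligned box."""
    return tuple(c + rng.uniform(-h, h) for c, h in zip(center, half_extents))


# Hypothetical river: 40 m long, 6 m wide, emitters close to the water surface.
river_center = (100.0, 0.0, 20.0)
river_half_extents = (20.0, 0.5, 3.0)

rng = random.Random(1234)   # seeded so the sketch is reproducible
positions = [random_point_in_box(river_center, river_half_extents, rng)
             for _ in range(100)]
```

Each triggered Cue would then be played from one of these positions, so that repeated sounds do not always appear to come from the same spot.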

Listener

The listener is usually set to the position of the camera or the player. It is also possible to set its velocity, as well as its forward and upward vectors.

Sound emitter

Sound emitters (or “sound sources”) can be placed in the game level. In addition to their 3D position, their velocity, cone orientation, cone angle, and distance attenuation can also be set.
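To show how the listener and emitter positions feed into distance attenuation, here is a minimal sketch. It assumes a simple linear falloff between a minimum and a maximum distance; the `distance_gain` function and this curve are our assumptions, and the real attenuation is whatever the sound designer sets up in the tool.

```python
import math


def distance_gain(listener_pos, emitter_pos, min_distance, max_distance):
    """Linear falloff: full volume up to min_distance, silent from max_distance."""
    distance = math.dist(listener_pos, emitter_pos)   # Euclidean distance (Python 3.8+)
    if distance <= min_distance:
        return 1.0
    if distance >= max_distance:
        return 0.0
    return 1.0 - (distance - min_distance) / (max_distance - min_distance)


listener = (0.0, 0.0, 0.0)

near = distance_gain(listener, (0.0, 0.0, 5.0), 10.0, 50.0)     # inside min distance
middle = distance_gain(listener, (0.0, 0.0, 30.0), 10.0, 50.0)  # halfway through falloff
far = distance_gain(listener, (0.0, 0.0, 80.0), 10.0, 50.0)     # beyond max distance
```

The same normalized distance could also be sent to an AISAC Control to drive other parameters, such as a filter cutoff.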

Beat Sync

The Beat Sync feature can be combined with actions and other features (such as Track Transition by Selector) to determine playback and switching timing without the involvement of a programmer.
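The timing logic behind beat-synced switching can be sketched as a quantization to the next beat (or bar) boundary. This is a conceptual illustration assuming a constant tempo; the `next_sync_time` function and its parameters are hypothetical.

```python
import math


def next_sync_time(now_s, bpm, beats_per_division=1.0):
    """Return the time of the next division boundary at or after now_s."""
    interval_s = 60.0 / bpm * beats_per_division   # length of one division in seconds
    return math.ceil(now_s / interval_s) * interval_s


# At 120 BPM, a beat lasts 0.5 s and a 4-beat bar lasts 2 s.
on_next_beat = next_sync_time(1.3, bpm=120.0)                         # next beat
on_next_bar = next_sync_time(1.3, bpm=120.0, beats_per_division=4.0)  # next bar
```

A request made at 1.3 s would therefore take effect at 1.5 s when synchronized to the beat, or at 2.0 s when synchronized to the bar.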

That’s it for Cue structures, real-time updates, and parameters. There are obviously other parameters, such as voice limiting and bus sends, that we will address in the final part of this lexicon. It will focus on the last steps of the implementation in AtomCraft (mixing, previewing, and profiling), and we will even explain a bit of runtime terminology!